💯 I was wrong. AI is eating the complex work first.

Plus: 3 free live sessions to kickstart your AI year

I saw a post this week that made me laugh because it felt a little too real. Duncan laid out a very elaborate “system” for making AI writing sound human, which mostly involved deleting everything and starting over.

It’s funny, but it also points to something very real. A lot of us are still figuring out where AI is helping us versus where it’s just adding noise or turning into slop. The gap between hype, anxiety, and real-world use is still pretty wide.

Which is why this caught my attention👇

Anthropic's new Economic Index Report 🤖

Look, I know. Another Anthropic report. But I can't help myself.

Here's the thing. I use AI to clear my "read later" list. It's the only way I keep up without drowning in AI FOMO. And this post from Mike (who's currently doing our 30 Days Of AI challenge) reminded me why.

So earlier this week, when Anthropic dropped their Economic Index Report (40+ pages of dense data about how people are using Claude across 117 countries and millions of conversations), I did the same thing. I fed the whole thing to Claude (yes, the irony is not lost on me), asked the right questions, and pulled out what matters if you're trying to get better at AI.

Here's what stood out:

1. They finally measured how long AI can reliably work

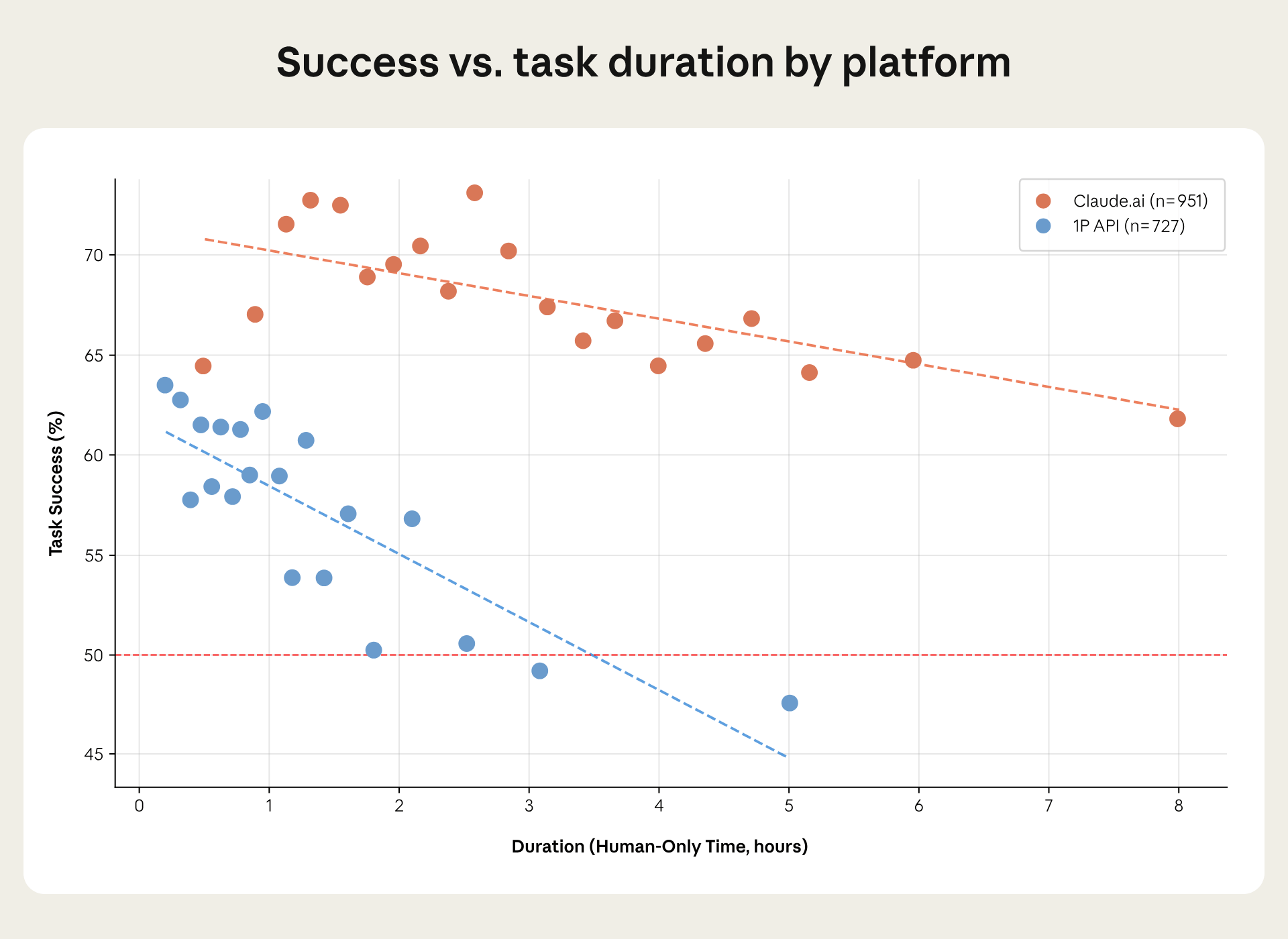

This is new. Previous reports tracked what people use Claude for. This one measures how long those tasks take and whether Claude succeeds.

Turns out, in multi-turn conversations (back-and-forth on Claude.ai), Claude maintains a 50% success rate on tasks that would take a human 19 hours. On single-shot API calls, that 50% mark drops to tasks of about 3.5 hours.

That's roughly a 5x difference (19 ÷ 3.5 ≈ 5.4). The conversation is the capability.

✅ Try this: stop treating AI like a vending machine. The best results come from conversation, not one perfect prompt, so optimize your process instead. Break big tasks into phases. Let AI draft, then push back, then refine.

In the chart above: orange = multi-turn conversations on Claude.ai, blue = single-shot API calls. Notice how the orange line barely drops as tasks get longer, while blue falls fast. The takeaway: iteration isn't just nice to have. It’s what makes AI reliable for complex work.
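If you like seeing ideas as code, here's a rough sketch of that "draft, then push back, then refine" loop. It is only an illustration, not how Claude or any particular API works: `iterate_in_phases` and `fake_ask` are names I made up, and `ask` stands in for whatever model call you actually use.

```python
from typing import Callable, List


def iterate_in_phases(
    task: str,
    feedback_rounds: List[str],
    ask: Callable[[List[str]], str],
) -> List[str]:
    """Ask for a first draft, then one revision per feedback note.

    `ask` is a hypothetical stand-in for a model call: it receives the
    whole conversation so far and returns the next reply.
    """
    history = [f"Draft a first version: {task}"]
    drafts = [ask(history)]
    for note in feedback_rounds:
        # Keep the previous draft in the conversation so the next
        # revision builds on it instead of starting from scratch.
        history.append(drafts[-1])
        history.append(f"Revise the draft. Feedback: {note}")
        drafts.append(ask(history))
    return drafts


def fake_ask(history: List[str]) -> str:
    """Placeholder reply so the sketch runs without any AI service."""
    return f"draft v{(len(history) + 1) // 2}"


drafts = iterate_in_phases(
    "a one-page project update",
    ["Too long, cut it in half", "Lead with the decision we need"],
    fake_ask,
)
print(drafts)
```

The point of the design is that every round sees the full history, which is what a back-and-forth chat gives you for free and a single prompt does not.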

2. AI is taking on the complex work, not the boring work

I've been saying this for a while: let AI handle the boring stuff, keep the thinking for yourself. Turns out that's not quite how it's playing out.

Anthropic looked at which tasks people are actually using Claude for and found it's disproportionately the cognitively demanding work (research, analysis, planning, synthesis). What's being left to humans is often the more routine, transactional stuff.

For example, travel agents are using AI to plan complex itineraries, the part that requires judgment about what makes a good trip. What's left for the human is printing tickets and collecting payment. Now, this isn't true for every job. Real estate managers, for instance, are offloading bookkeeping to AI and keeping the contract negotiations and stakeholder conversations. But the overall trend is clear: AI isn't just eating the busywork. It's eating the cognitive work too.

✅ What this means for you: The moat isn't "I do the thinking, AI does the busywork." The moat is knowing which thinking to keep and getting better at the judgment AI can't do yet.

3. Collaboration is rising, not falling

After months of people trending toward "just let Claude handle it" mode, November saw a 5-point jump back toward collaborative use: iterating, learning, getting feedback, and so on.

Anthropic thinks it's thanks to new features like persistent memory, file creation, and custom workflows: tools that make Claude feel less like a search box and more like a coworker.

And speaking of AI becoming more of a working partner, Anthropic just launched Cowork, basically Claude Code for non-technical work. Browser automation, data connectors, file management. It's early and raw, but the direction is clear: AI is moving from "answer my question" to "help me do my job."

4. How you show up is how AI shows up

The correlation between prompt sophistication and response sophistication is 0.92. That's near perfect. Higher-education tasks show bigger productivity gains, not because AI is smarter for smart people, but because those tasks come with clearer problem definitions and better context.

✅ Try this: before prompting, write down three things. What's the actual problem? What does good look like? What context would a smart colleague need? The 5 minutes you spend on this saves 30 minutes of mediocre outputs.
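For anyone who wants to turn those three questions into a habit, here's a small sketch of a reusable template. The function name `build_brief` and the section labels are my own choices for illustration, not anything from the report.

```python
def build_brief(problem: str, good_looks_like: str, context: str) -> str:
    """Assemble a prompt brief from the three pre-prompt questions.

    Raises ValueError if any answer is blank, so a missing piece gets
    noticed before the prompt is sent.
    """
    parts = {
        "Problem": problem,
        "What good looks like": good_looks_like,
        "Context": context,
    }
    missing = [label for label, text in parts.items() if not text.strip()]
    if missing:
        raise ValueError("Missing answers for: " + ", ".join(missing))
    # One labeled section per question, separated by blank lines.
    return "\n\n".join(f"{label}:\n{text.strip()}" for label, text in parts.items())


brief = build_brief(
    problem="Our onboarding email gets opened but nobody books a call.",
    good_looks_like="Three subject lines and one rewritten email under 120 words.",
    context="Audience is non-technical team leads at mid-size companies.",
)
print(brief)
```

Paste the result at the top of your conversation, then iterate from there.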

If you want to dig deeper, you can read the full report here.

Free live AI Sessions ⚡️

If you want to explore this stuff live (and ask messy, real questions), Harold, Ciara, and our friends from Create With are hosting 3 free live intro sessions in the next week, to help non-technical professionals kickstart the new AI year.

20/01: Automation 101

21/01: Vibe Coding 101

27/01: Claude Code 101

Quick note if you’re reading this from work 💻

We’ve been running AI trainings for teams who want their people building practical workflows. If that’s relevant for you, you can poke around here and see if it makes sense.

When teams go through this together, a few things shift fast:

Standards start forming naturally.

People stop hiding how they’re using AI from their team.

“Is this good?” becomes a shared conversation instead of a private doubt spiral.

Before you go ✌️

Curious what part of your work AI is already doing better than you expected. Hit reply if you’ve noticed it.

Max 👋

P.S. Want to make your team & company AI-first? Let us help here.